The system update brings Apple Intelligence to a few iPhone models, but here are the newest AI features that you will most likely use every day.

Apple has been teasing and promising most of the Apple Intelligence features since it announced the technology earlier this year, so now that iOS 18.1 has rolled out, we can finally get a taste of Apple’s AI ambitions.

And though the first wave of features is somewhat limited in scope, you are bound to find at least some of them useful. After running the iOS betas with access to Apple Intelligence for several weeks, I think these are the three features you’ll actually use on a day-to-day basis.

You need an iPhone 15 Pro, iPhone 16, or iPhone 16 Pro (including the Plus and Max variants) running iOS 18.1. Importantly, you also have to request Apple Intelligence access to actually use these features.

Once inside, here is what you can realistically expect. Over time, more features will be added — and don’t forget that Apple Intelligence is officially still beta software — but this is where Apple starts its AI age.

Summaries bring TL;DR to your correspondence

In an age of so many competing demands for our attention and apparently limited time to really dig in on bigger subjects… Pardon, what was my point?

Oh, right: How many times have you wished you had a “TL;DR” version of not just long emails but the firehose of communication that blasts you? The ability to summarize notifications, Mail messages, and web pages is probably the most pervasive and least intrusive feature of Apple Intelligence yet.

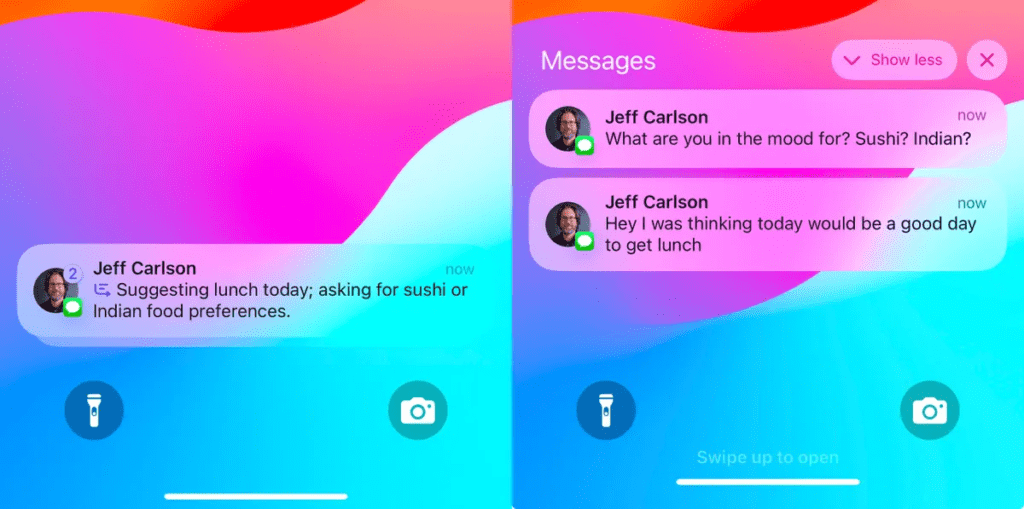

When a message arrives, such as a text from a friend or group in Messages, the iPhone writes a short, one-line summary.

Sometimes the summaries are ambiguous, sometimes unintentionally funny, but thus far I have found them more useful than not. Summaries are also generated for alerts from third-party apps such as news or social media apps, although I suspect my outdoor security camera is picking up multiple passersby over time and not telling me that 10 people are stacked by the door.

That said, Apple Intelligence does not get sarcasm or colloquialisms, and you can turn summaries off if you find them annoying.

You can also generate a longer summary of an email in the Mail app: At the top of a message, tap the Summarize button to see the content condensed into a few dozen words.

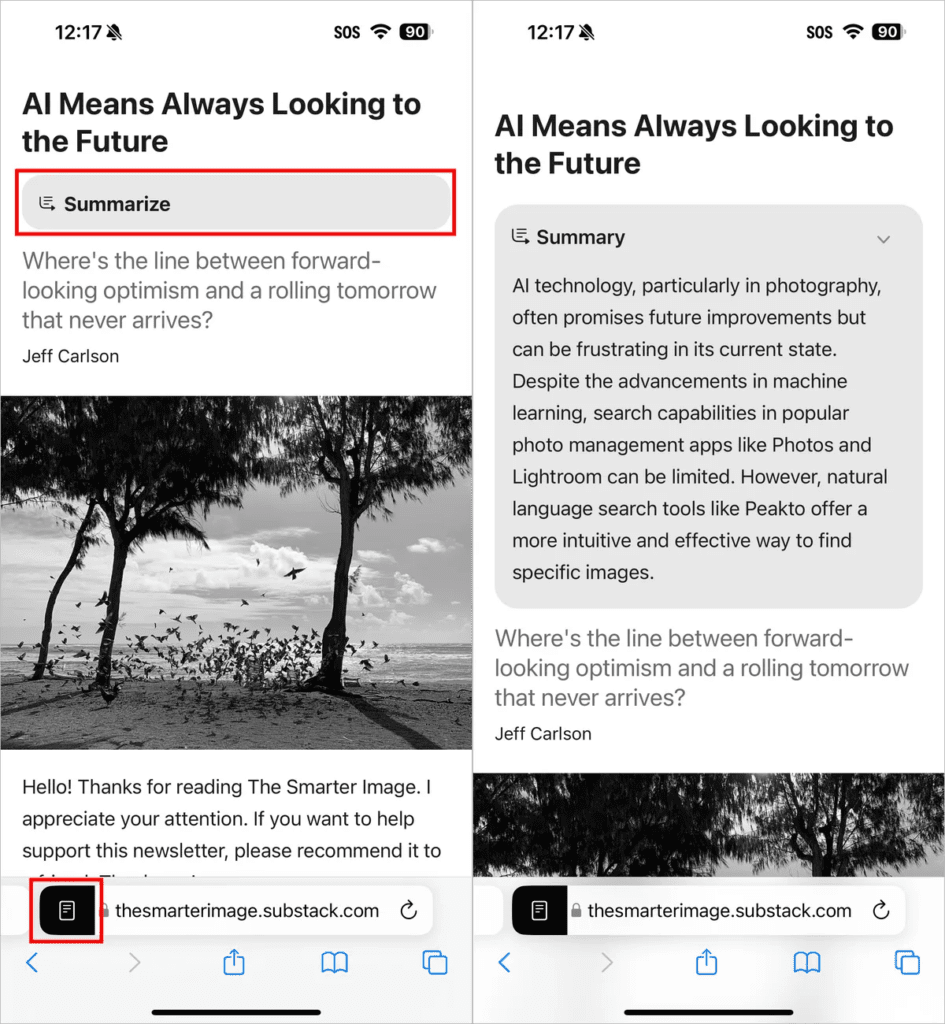

To summarize a web page, open Safari and go to a page that supports the Reader feature. Tap the Page Menu button in the address bar, tap Show Reader, and then tap the Summary button at the top of the page.

Siri gets a glow-up and better interaction

I was amused that, at the launches of iOS 18 and the iPhone 16, the most significant visual cue of Apple Intelligence (the full-screen, color-at-the-edges Siri animation) did not make an appearance. Apple even lit up the edges of the massive glass cube of its Apple Fifth Avenue Store in New York City like a Siri search.

Instead, iOS 18 used the same old Siri sphere.

Well, Siri now has its modern look in iOS 18.1, but again, only on devices that support Apple Intelligence. And those still waiting patiently on the Apple Intelligence waitlist will keep seeing the Siri sphere for now as well.

Along with the new look I mentioned above, Siri’s interaction improvements include being far more forgiving of a flubbed query, such as when you say the wrong word or interrupt yourself mid-thought. It is also far better at listening after giving you its results, so you can ask follow-up questions related to what you’re doing.

However, answers based on what Apple knows about you are still way down the road. Also not ready yet: iOS 18.1 does not integrate ChatGPT as an alternative source of information. That integration has just rolled out in the iOS 18.2 developer beta.

Remove distractions from your pictures using Clean Up in the Photos app

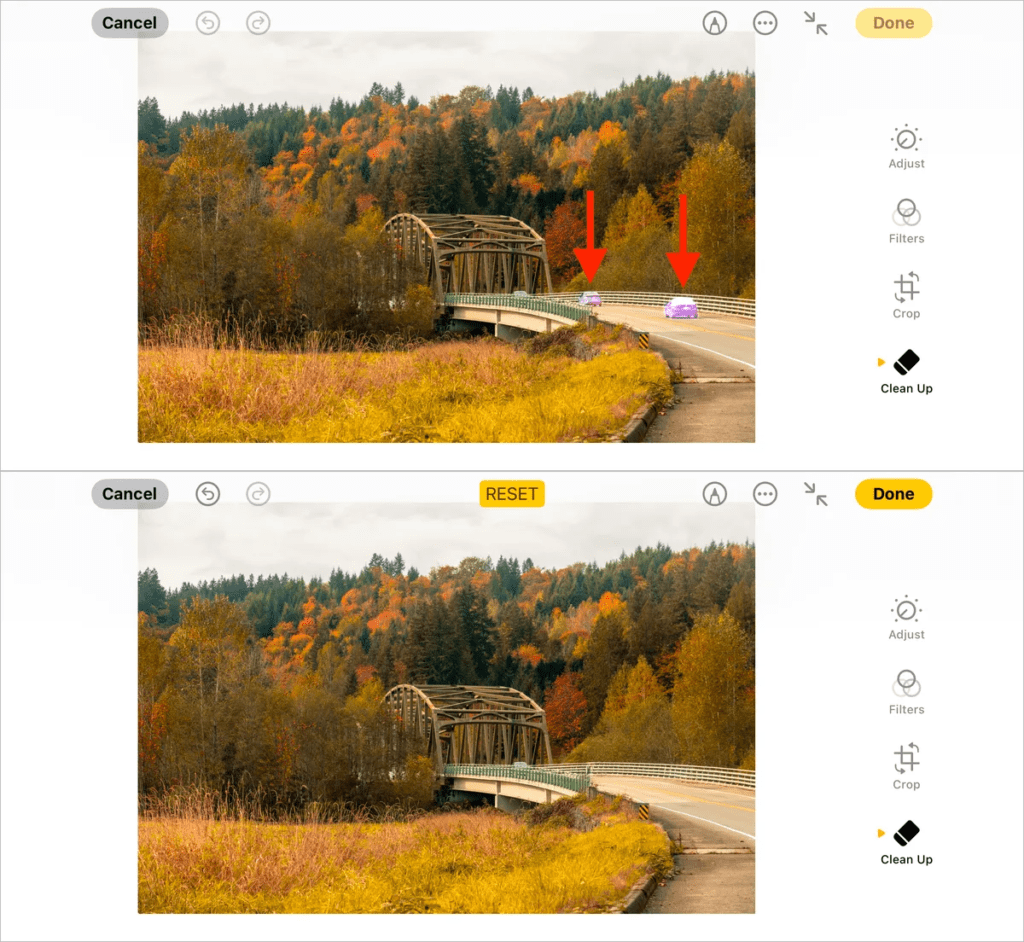

Until iOS 18.1, the Photos app on the iPhone and iPad lacked a simple retouch feature. Dust on your camera lens? Litter on the ground? Forget about it: you had to live with them and every other annoying distraction, or fix them in the Photos app on macOS or in a third-party app.

Now Apple Intelligence adds Clean Up to Photos. When you edit a photo, you’ll see a Clean Up button that, when tapped, analyzes the image and suggests items to remove. Tap an item or circle part of the picture with your finger, and the app erases it and uses generative AI to fill the erased area with plausible pixels.

In this first iteration, Clean Up isn’t great, and you often will get better results in other dedicated image editors. Still, for quickly clearing a photo of annoyances, it’s fine.