Quantum computers, which work by exploiting the strange rules of quantum mechanics, may soon solve problems that are out of reach for today’s computers. While we’re not there yet, quantum computing has made huge strides since its beginnings. What started as a theoretical concept is now a multi-billion-dollar industry, and it continues to grow.

Here’s a look at 12 key moments in the history of quantum computing that helped shape this exciting field.

1980: The Birth of Quantum Computing

In the 1970s, scientists began thinking about how quantum mechanics (the science of very small particles) might connect to information theory (the science of information and computing). In 1980, American physicist Paul Benioff proposed the first concept for a quantum computer. He described a quantum version of a “Turing machine,” a theoretical model of a computer invented by Alan Turing. Benioff showed that such a machine could be described using quantum mechanics, which laid the foundation for quantum computing.

In 1981, Benioff and the renowned physicist Richard Feynman gave talks on quantum computing at the first Physics of Computation Conference. Feynman argued that because the universe operates under quantum rules, we would need quantum computers to simulate it accurately. His idea of a “quantum simulator” helped fuel interest in quantum computing.

1985: The Universal Quantum Computer

One of the basic ideas in computer science is the “universal Turing machine,” which can solve any problem a regular computer can. In 1985, physicist David Deutsch expanded on this idea. He pointed out that classical (regular) computers are built on classical physics, so they cannot efficiently simulate quantum processes. Deutsch introduced the idea of a “universal quantum computer,” which could simulate any physical process, including quantum ones. This was a major step in establishing the power of quantum computers.

1994: The First Practical Use for Quantum Computers

Quantum computers promised great potential, but no one had yet found a practical application for them. In 1994, mathematician Peter Shor changed that. He introduced a quantum algorithm that could efficiently factor large numbers, a task that becomes extremely difficult for classical computers as the numbers grow. This matters because the difficulty of factoring underpins many encryption methods, such as RSA. Shor’s algorithm showed that a sufficiently large quantum computer could break these schemes far faster than a classical computer, which spurred a major push to develop new, “post-quantum” cryptography.
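Shor’s speedup comes from finding the period of modular exponentiation, that is, the smallest r with a^r mod N = 1. Turning that period into factors is ordinary classical arithmetic. The Python sketch below is a classical-only illustration of that post-processing. It uses N = 15, the same number factored in the 2001 experiment, and the base a = 7, which is an arbitrary choice. The function names are hypothetical, and the period is found here by brute force. That brute-force step is exactly the part a quantum computer would speed up.

```python
from math import gcd
from typing import Optional, Tuple


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm accelerates on a quantum computer;
    done classically, its cost grows quickly as n gets larger.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing step of Shor's algorithm (illustrative)."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g      # a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None           # odd period: retry with a different a
    x = pow(a, r // 2, n)     # a^(r/2) mod n
    if x == n - 1:
        return None           # trivial square root: retry with a different a
    # a^r - 1 = (x - 1)(x + 1) is divisible by n, so each gcd exposes a factor
    return gcd(x - 1, n), gcd(x + 1, n)


print(factor_from_period(15, 7))  # prints (3, 5)
```

For a = 7 the powers modulo 15 run 7, 4, 13, 1, so the period is 4. Then x = 7² mod 15 = 4, and gcd(3, 15) = 3 and gcd(5, 15) = 5 recover the factors.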

1996: Quantum Computing Tackles Search Problems

In 1996, Bell Labs computer scientist Lov Grover introduced another important quantum algorithm. This one focused on “unstructured search” problems, where the data isn’t organized in any particular way. A classical computer searching such data has to check items one by one, which takes on the order of N checks for N items. Grover’s algorithm, using the quantum phenomenon of superposition, needs only roughly √N steps. This was another clear example of how quantum computing could outperform classical computers.
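To make the speedup concrete, here is a small Python sketch that simulates Grover’s algorithm classically with a plain list of amplitudes. Real numbers are enough here. The function name `grover_search` and the choice of marked item are illustrative assumptions. Each iteration does two things. First, it flips the sign of the marked item’s amplitude (the “oracle”). Second, it reflects all amplitudes about their mean (the “diffusion” step). After about (π/4)√N iterations, a measurement is very likely to return the marked item.

```python
import math
from typing import Tuple


def grover_search(num_items: int, marked: int) -> Tuple[int, float]:
    """Classically simulate Grover's algorithm (illustrative sketch).

    Returns the number of Grover iterations used and the probability
    of measuring the marked item afterwards.
    """
    # Start in an equal superposition over all items.
    amp = [1 / math.sqrt(num_items)] * num_items
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amp[marked] = -amp[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amp) / num_items
        amp = [2 * mean - a for a in amp]
    # Measurement probability is the squared amplitude.
    return iterations, amp[marked] ** 2


for n in (16, 256, 4096):
    k, p = grover_search(n, marked=3)
    print(f"N={n}: {k} iterations, P(marked)={p:.3f}")
```

For N = 16, 256 and 4096, the loop runs 3, 12 and 50 iterations. A classical scan, by comparison, may need up to N checks. The simulation itself is not faster than a classical search, because it updates all N amplitudes at every step. It only shows how few oracle queries a quantum computer would need.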

1998: The First Demonstration of a Quantum Algorithm

Coming up with quantum algorithms is one thing, but getting them to work on real hardware is much harder. In 1998, IBM researcher Isaac Chuang and his team made a breakthrough by running Grover’s algorithm on a quantum computer with two qubits (quantum bits, the basic units of quantum information). Just three years later, in 2001, Chuang’s team implemented Shor’s algorithm on a quantum computer, factoring the number 15 using a seven-qubit processor. These experiments were small, but they proved that quantum algorithms could work in practice.

1999: The Birth of the Superconducting Quantum Computer

Quantum computers rely on qubits, and these can be built from a variety of physical systems. In 1999, researchers at the Japanese company NEC created qubits using superconducting circuits, which have zero electrical resistance at very low temperatures. Qubits made this way can be controlled electronically, and this approach has since become the most popular one. Today, major companies such as Google and IBM use superconducting qubits in their quantum computers.

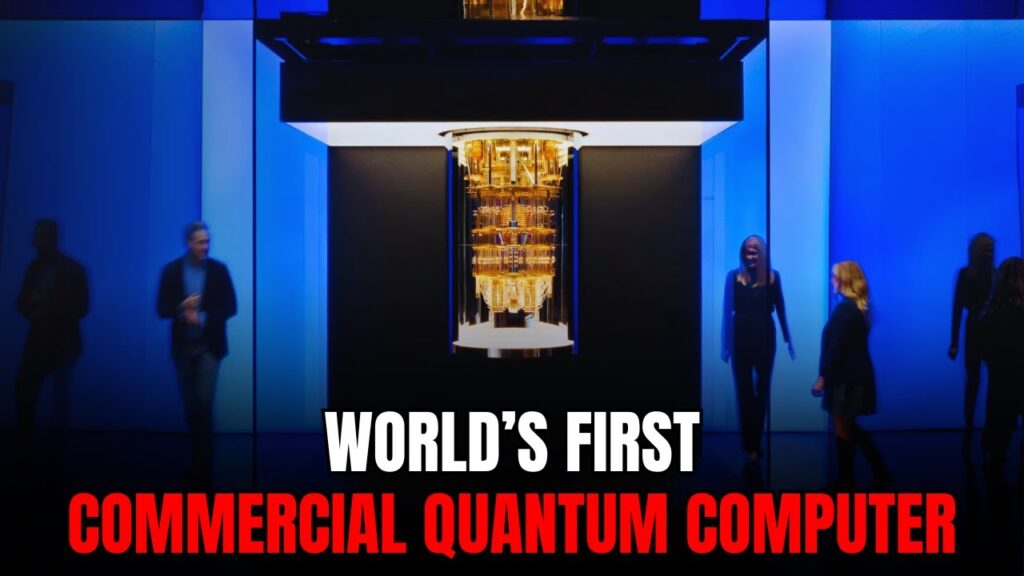

2011: The First Commercial Quantum Computer

For a long time, quantum computing was mainly a field of academic research. But in 2011, the Canadian company D-Wave released the first commercially available quantum computer. The D-Wave One featured 128 qubits and cost about $10 million. However, it wasn’t a “universal” quantum computer. Instead, it used an approach called “quantum annealing” to solve specific optimization problems. While there wasn’t much evidence that it was faster than classical computers, it marked the start of the quantum computing industry.

2016: IBM Puts Quantum Computing on the Cloud

Until 2016, only a few groups had access to quantum computers, and it was difficult for researchers to gain hands-on experience with the technology. That changed when IBM made its 5-qubit processor available over the cloud. In May of that year, the company launched the IBM Quantum Experience service, allowing anyone to run quantum computing jobs online. Within two weeks, more than 17,000 people had signed up, making it a major moment in opening up quantum computing to the world.

2019: Google Claims “Quantum Supremacy”

In 2019, Google made a big claim: it said it had achieved “quantum supremacy,” the point at which a quantum computer solves a problem faster than any classical computer could. Using 53 qubits, Google’s processor completed a complex calculation in 200 seconds. The company estimated the same task would take a supercomputer 10,000 years. The problem had no practical use, but it was a proof of concept that quantum computers could solve certain problems much faster than classical computers.

2022: A Challenge to Google’s Claim

Google’s claim of quantum supremacy wasn’t accepted by everyone. IBM and other critics argued that the problem wasn’t as difficult as Google made it seem. In 2022, a team from the Chinese Academy of Sciences showed that they could simulate Google’s quantum operations in just 15 hours using a classical algorithm on 512 GPU chips. They even suggested that with a large enough supercomputer, they could do it in seconds. This was a reminder that classical computing still has room for improvement, and that the “quantum advantage” remains a moving target.

2023: QuEra Sets a Record for Most Logical Qubits

One of the biggest challenges in quantum computing is that qubits are error-prone. To deal with these errors, researchers combine many physical qubits into “logical qubits.” These encode information redundantly so that errors can be detected and corrected, which allows reliable operations. In 2023, Harvard researchers working with the start-up QuEra made a major breakthrough by generating 48 logical qubits at once. This was 10 times more than anyone had achieved before. They were able to run algorithms on these logical qubits, moving one step closer to fault-tolerant quantum computing.

These 12 moments highlight the incredible progress made in quantum computing over the past few decades. From theoretical ideas to real-world applications, quantum computers are slowly but surely becoming a reality, with the potential to revolutionize many areas of technology in the future.